Adding Custom Blocks to Snap! For AI Programming

Enabling children to build AI programs…

Machine Learning and Artificial Intelligence (AI) Programming for Children

The year 2017 saw a sudden surge of interest in, and support for, Artificial Intelligence (AI) programming by children.

Stephen Wolfram added a chapter on machine learning to his textbook An Elementary Introduction to the Wolfram Language. In a blog post, Machine Learning for Middle Schoolers, he describes the rationale behind its examples and exercises.

Dale Lane launched Machine Learning for Kids, which he recently described in his excellent article in Hello World issue 4.

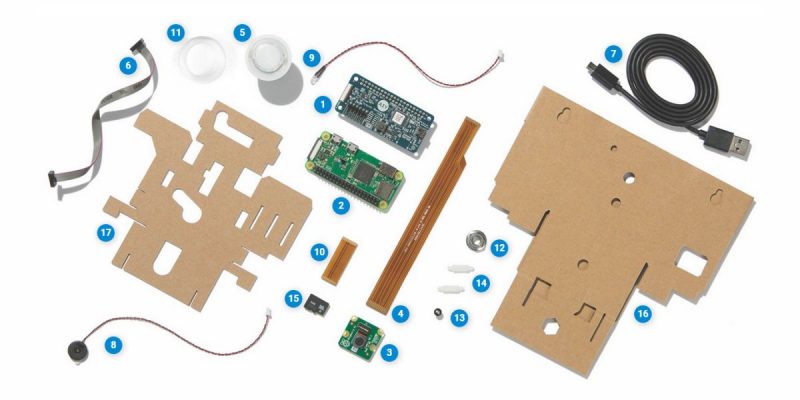

Google launched AIY Projects, with do-it-yourself voice and vision kits for the Raspberry Pi.

Google also launched the Teachable Machine and a collection of browser-based machine learning experiments.

The idea that children should be making AI programs goes back at least to the 1970s. In 1977 I wrote a paper on this, Three Interactions between AI and Education, published in Machine Intelligence 8.

Seymour Papert, Cynthia Solomon, and others at the MIT Logo Group saw many potential benefits in students doing AI projects. In the process of building perceptive robots and apps, students can learn about perception, reasoning, language, psychology, and animal behaviour.

Building AI programs also provides fertile ground for self-reflection: students may begin to think harder about how they themselves see and hear, for example. Students may also be more motivated because their programs are capable of impressive behaviour. Today, we can add the motivation that students will learn about a technology of increasing importance in science, medicine, transport, finance, and much more.

Adding Custom Blocks to Snap! For AI Programming

Forty years later, I returned to my interest in supporting AI programming by children.

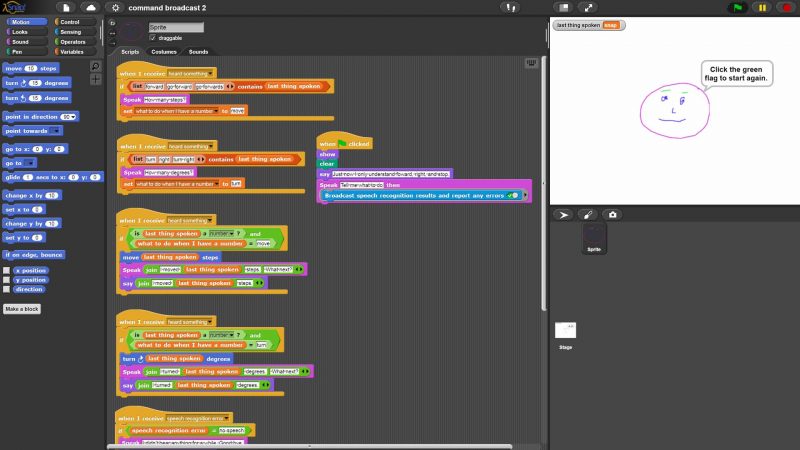

My initial idea was to add custom blocks to Snap! that used AI cloud services to provide speech input and output and image recognition. Speech synthesis and recognition are now provided free by the Chrome browser, so I created blocks to speak and to listen that interface with these APIs.
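For readers curious about what such a block does underneath, here is a minimal TypeScript sketch against the browser's Web Speech API. `SpeechSynthesisUtterance` (with its `pitch`, `rate`, and `lang` properties), `speechSynthesis.speak`, and Chrome's prefixed `webkitSpeechRecognition` are standard browser features. The helper names `randomSpeechSettings`, `speak`, and `listenOnce`, and the chosen ranges, are hypothetical. This is not the code of the actual Snap! blocks.

```typescript
// Settings a hypothetical "speak" block could pass to the browser.
interface SpeechSettings {
  pitch: number; // the Web Speech API allows 0 to 2; 1 is the default
  rate: number; // the API allows 0.1 to 10; this sketch keeps to 0.5 to 2
  lang: string; // BCP 47 language tag such as "en-GB"
}

// Choose random settings. `random` can be replaced to make the choice repeatable.
function randomSpeechSettings(
  languages: string[],
  random: () => number = Math.random,
): SpeechSettings {
  return {
    pitch: random() * 2,
    rate: 0.5 + random() * 1.5,
    lang: languages[Math.floor(random() * languages.length)],
  };
}

// Speak `text` aloud. Returns false when speech synthesis is unavailable
// (for example outside a browser), true once the utterance is queued.
function speak(text: string, settings: SpeechSettings): boolean {
  const g = globalThis as any;
  if (!g.speechSynthesis || !g.SpeechSynthesisUtterance) {
    return false;
  }
  const utterance = new g.SpeechSynthesisUtterance(text);
  utterance.pitch = settings.pitch;
  utterance.rate = settings.rate;
  utterance.lang = settings.lang;
  g.speechSynthesis.speak(utterance);
  return true;
}

// Listen for one phrase and report the top transcript. Chrome exposes the
// recogniser under the prefixed name webkitSpeechRecognition.
function listenOnce(
  lang: string,
  onText: (text: string) => void,
  onError: (message: string) => void,
): boolean {
  const g = globalThis as any;
  const Recognition = g.SpeechRecognition || g.webkitSpeechRecognition;
  if (!Recognition) {
    return false;
  }
  const recognizer = new Recognition();
  recognizer.lang = lang;
  recognizer.onresult = (event: any) => onText(event.results[0][0].transcript);
  recognizer.onerror = (event: any) => onError(String(event.error));
  recognizer.start();
  return true;
}
```

Randomising pitch, rate, and language in this way is the idea behind the first sample project listed below. To choose a random voice as well, you would pick from `speechSynthesis.getVoices()`. In Chrome, that list may be empty until the `voiceschanged` event fires.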

Image recognition services are provided by Google, Microsoft, IBM, and others, but only to those who supply an ‘API key’ with each query. Fortunately, keys are easy to obtain and allow at least 100 free queries per day.
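As an illustration of what a query with an API key looks like, here is a minimal TypeScript sketch. It assumes Google Cloud Vision's `images:annotate` REST endpoint with `LABEL_DETECTION`, which takes the key as a `key` query parameter. Microsoft's and IBM's services use different URLs and JSON shapes. The function names and the 0.7 confidence threshold are hypothetical, and the sketch is not the code behind the Snap! blocks.

```typescript
// Shape of the part of a Cloud Vision response this sketch reads.
interface LabelAnnotation {
  description: string;
  score: number;
}
interface AnnotateResponse {
  responses?: { labelAnnotations?: LabelAnnotation[] }[];
}

interface LabelRequest {
  url: string;
  body: string;
}

// Build a label-detection request. `image` may be raw base64 or a data URL
// such as the one canvas.toDataURL("image/jpeg") returns.
function buildLabelRequest(apiKey: string, image: string, maxResults: number): LabelRequest {
  const content = image.replace(/^data:image\/[a-z]+;base64,/, "");
  return {
    url: "https://vision.googleapis.com/v1/images:annotate?key=" + encodeURIComponent(apiKey),
    body: JSON.stringify({
      requests: [
        {
          image: { content: content },
          features: [{ type: "LABEL_DETECTION", maxResults: maxResults }],
        },
      ],
    }),
  };
}

// Turn the response into a sentence a speak block could say, keeping only
// labels whose confidence score is at least `minScore`.
function describeLabels(json: AnnotateResponse, minScore: number): string {
  const first = json.responses && json.responses[0];
  const annotations: LabelAnnotation[] = (first && first.labelAnnotations) || [];
  const labels = annotations
    .filter((a) => a.score >= minScore)
    .map((a) => a.description.toLowerCase());
  if (labels.length === 0) {
    return "I am not sure what I can see.";
  }
  return "I can see: " + labels.join(", ") + ".";
}

// Browser only: send the request and hand the sentence to a callback.
function describeImage(apiKey: string, image: string, onSentence: (s: string) => void): void {
  const request = buildLabelRequest(apiKey, image, 5);
  (globalThis as any)
    .fetch(request.url, {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      body: request.body,
    })
    .then((response: any) => response.json())
    .then((json: AnnotateResponse) => onSentence(describeLabels(json, 0.7)));
}
```

Passing the sentence to a speak block gives the "describe what is in front of the camera" projects listed below. Bear in mind that anyone who opens a shared project can read a key written into it.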

Machine Learning Blocks for Snap!

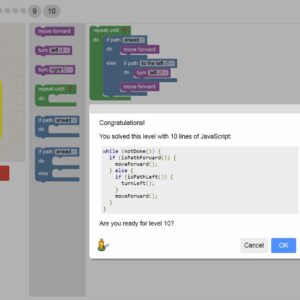

Inspired by Dale Lane’s addition of machine learning to Scratch and by Google’s Teachable Machine, I then added machine learning blocks to Snap!

Currently, only camera input is supported; support for audio, images, text, and data is planned.

Thanks to Google’s work on deeplearn.js, training runs fast within the browser by using the computer’s GPU. No servers are needed.
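Camera training of the Teachable Machine kind is often done in two steps. A pretrained neural network turns each camera frame into a feature vector, and new frames are then labelled according to their nearest training examples. The sketch below shows only that second step, a k-nearest-neighbour classifier in plain TypeScript. It assumes the feature vectors already exist, runs on the CPU rather than the GPU, and uses hypothetical names (`Example`, `classify`). It illustrates the general technique, not the actual implementation inside the Snap! blocks or deeplearn.js.

```typescript
// One labelled training example, such as the feature vector of a camera
// frame captured while the child points left, labelled "left".
interface Example {
  features: number[];
  label: string;
}

// Squared Euclidean distance; the square root is skipped because it does
// not change which examples are nearest.
function squaredDistance(a: number[], b: number[]): number {
  let sum = 0;
  for (let i = 0; i < a.length; i++) {
    const d = a[i] - b[i];
    sum += d * d;
  }
  return sum;
}

// Label `features` by majority vote among the k nearest training examples.
// On a tie, the label that reached the top count first (via nearer
// examples) wins. Returns "" when there are no examples.
function classify(examples: Example[], features: number[], k: number): string {
  const nearest = examples
    .map((ex) => ({ label: ex.label, distance: squaredDistance(ex.features, features) }))
    .sort((x, y) => x.distance - y.distance)
    .slice(0, k);
  const votes: { [label: string]: number } = {};
  let best = "";
  let bestVotes = 0;
  for (const n of nearest) {
    votes[n.label] = (votes[n.label] || 0) + 1;
    if (votes[n.label] > bestVotes) {
      best = n.label;
      bestVotes = votes[n.label];
    }
  }
  return best;
}
```

In a project like the finger-pointing one listed below, the labels might be "left" and "right", and a script would turn the turtle according to whichever label `classify` returns for each new frame. Nearest-neighbour classification suits classroom use because a newly added example takes effect immediately, with no separate training phase.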

Sample Snap! AI programs include:

- Listen to generated speech with random pitch, rate, voice, and language

- Speak single word commands to a sprite (with synonym support)

- Speak full sentence commands to a sprite (with keyword search)

- Create funny sentences by verbally answering questions

- Customise stories by verbally answering questions

- Ask questions of Wikipedia

- Listen to a description of what is in front of the camera

- Listen to a description of what is in front of the camera in response to you speaking

- Train a turtle to move depending on which way your finger is pointing

- Train a turtle to move depending on the voice commands you give

- Train a turtle to move left or right depending on which way you lean

- Play Rock Paper Scissors using Machine Learning
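The spoken-command projects need some way to turn recognised text into a sprite command. One simple approach, assumed here rather than taken from the actual sample projects, handles the two cases separately. For single-word commands it looks the word up in a synonym table; for full sentences it scans for the first known keyword. The table contents and function names are hypothetical.

```typescript
// Hypothetical synonym table: each sprite command and the words that trigger it.
const SYNONYMS: { [command: string]: string[] } = {
  forward: ["forward", "forwards", "ahead"],
  back: ["back", "backward", "backwards", "reverse"],
  left: ["left"],
  right: ["right"],
  stop: ["stop", "halt", "freeze"],
};

// Lower-case recognised speech and split it into words, dropping punctuation.
// (English letters only, to keep the sketch short.)
function words(text: string): string[] {
  return text
    .toLowerCase()
    .split(/[^a-z']+/)
    .filter((w) => w.length > 0);
}

// Find the command a single word stands for, or null if none.
function lookup(word: string): string | null {
  for (const command of Object.keys(SYNONYMS)) {
    if (SYNONYMS[command].indexOf(word) >= 0) {
      return command;
    }
  }
  return null;
}

// Single-word mode: the whole utterance must be exactly one known word.
function commandForWord(spoken: string): string | null {
  const ws = words(spoken);
  return ws.length === 1 ? lookup(ws[0]) : null;
}

// Sentence mode: return the command for the first keyword found, if any.
function commandInSentence(sentence: string): string | null {
  for (const w of words(sentence)) {
    const command = lookup(w);
    if (command !== null) {
      return command;
    }
  }
  return null;
}
```

Because `commandInSentence` takes the first keyword it finds, "go back, not right" yields "back". A more ambitious project would need to weigh conflicting keywords.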

Links to the new Snap! commands, sample projects, and a teacher guide to AI programming in Snap! are all freely available on the page Enabling children and beginning programmers to build AI programs. No installation is required.

We’re eager to hear the experiences of teachers who try out any of this, and to answer any questions you have.

Ken Kahn is a senior researcher at the University of Oxford. The work described here was supported by the eCraft2Learn project funded by the European Union’s Horizon 2020 Coordination & Research and Innovation Action under Grant Agreement No 731345.

Originally published in Hello World Issue 5: The Computing and Digital Making Magazine for Educators. The above article includes modifications to the original article by Ken Kahn. License: CC BY-NC-SA 3.0
